AI and Cybersecurity (IA et cybersécurité/en)

Latest version as of 5 December 2023, 15:38

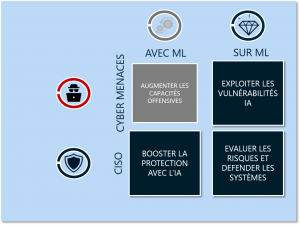

Analyze the areas of cybersecurity where Artificial Intelligence enables advances

Category: Working Group

Cycle: Cycle 2

Status: In progress

Start date: May 2021

End date: December 2023

Working Group objectives and deliverables

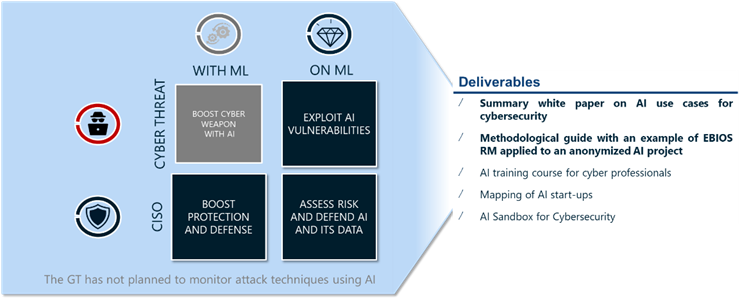

The mission of the Working Group, launched as part of Cycle 2 of the Cyber Campus, is to analyze the areas in which cybersecurity and artificial intelligence intersect.

Initial objectives

- Catalog the potential uses of AI for cybersecurity.

- Create a CYLVIA sandbox environment in which Cyber Campus members can experiment and build their skills within a restricted environment.

- Create AI training courses for cybersecurity professionals at various levels.

- Identify and map startups specializing in AI solutions for cybersecurity.

- Identify cyberthreats against AI-based application systems and their possible mitigations.

The security of intelligent systems and their uses in cybersecurity: state of knowledge and skills

Artificial Intelligence systems are increasingly widespread in modern information technology and have advanced considerably in recent years. To prepare the next generations of applications and of defense systems against cyber threats, the Cyber Campus, through its Artificial Intelligence and Cybersecurity Working Group, highlights the main uses of AI for cybersecurity, their identified limits, and the risks and main measures recommended to secure these systems.

Which players are involved in Artificial Intelligence projects?

Artificial Intelligence projects involve a wide range of players who must make the most of the data and address a use case identified in advance. Identifying the key resources is a major challenge for any cybersecurity expert at the start of a project, and a succinct description of roles and responsibilities helps identify the stakeholders quickly. The various phases of an AI project, including the development, production launch and monitoring of an AI application, involve many players, only some of whom are data science specialists. The diagram below shows the four main families of players identified:

- "Business" profiles, in charge of the use case and its validation in relation to the company's objectives;

- Data science specialists, in charge of implementing Artificial Intelligence techniques to meet the needs identified by the "business";

- IT profiles, in charge of developing and putting into production the use cases designed by the data science specialists;

- Risk profiles, in charge of monitoring and ensuring compliance with internal policies and external regulations.

Each company may use slightly different names for these profiles and may divide the roles among players differently. Some profiles are cross-functional and work on many projects (business sponsor, production platform administrator, data architect and risk profiles), while others are dedicated to a single project and form a project team. Because risk profiles are cross-functional rather than part of a single project team, they must deliberately work alongside AI profiles in mixed project teams.

The Working Group also recommends creating two complementary positions: AI security referents (référents sécurité en Intelligence Artificielle) and AI facilitators (Facilitateurs en Intelligence Artificielle).

What contribution can artificial intelligence make to cybersecurity?

Artificial Intelligence, as a scientific field, has advanced considerably in recent years. This progress owes much to the pooling of techniques, capabilities and data, which has allowed statistical Machine Learning approaches to displace deterministic Expert System approaches. Machine Learning generalizes from examples and pays off when enough data is pooled to limit statistical effects such as sampling noise. Cybersecurity research therefore naturally focuses on the following areas:

- Identification: locating corporate information, starting with its classification, and applying dynamic policies;

- Protection: by analyzing weak signals and common behaviors, AI-based systems help configure security policies and mechanisms as effectively as possible;

- Detection: analyzing the slightest variations in behavior and changes to infrastructure to build detection scenarios dynamically;

- Recovery: decision support, drawing on detailed knowledge of the information system, to establish the response and recovery strategy for a cybersecurity incident.
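The detection idea above rests on spotting deviations from a learned baseline. The sketch below shows the simplest form of that idea. It deliberately uses a plain statistical baseline (a z-score) rather than any of the Working Group's Machine Learning models. The function name, the 3.0 threshold and the login-count data are hypothetical.

```python
from statistics import mean, stdev


def is_anomalous(history: list[float], observed: float, threshold: float = 3.0) -> bool:
    """Flag `observed` if it lies more than `threshold` standard deviations
    from the mean of the entity's own history (hypothetical helper)."""
    if len(history) < 2:
        raise ValueError("need at least two baseline observations")
    mu = mean(history)
    sigma = stdev(history)  # sample standard deviation of the baseline
    if sigma == 0:
        # Perfectly flat baseline: any change at all counts as a deviation.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical baseline: daily logins of one account over two weeks.
baseline = [4, 5, 3, 4, 6, 5, 4, 5, 4, 3, 5, 4, 6, 5]
print(is_anomalous(baseline, 5))   # typical day -> False
print(is_anomalous(baseline, 40))  # sudden burst -> True
```

Real behavior-analytics systems combine many such features and learn richer baselines. The principle is unchanged: the model describes "normal" for each entity and raises a signal when an observation falls far outside it.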

Based on the challenges and experiments carried out in a number of contexts, the Working Group has identified a set of cybersecurity use cases that can be accelerated or enhanced by the use of Artificial Intelligence. To this end, a framework has been defined to classify and enrich the use cases.

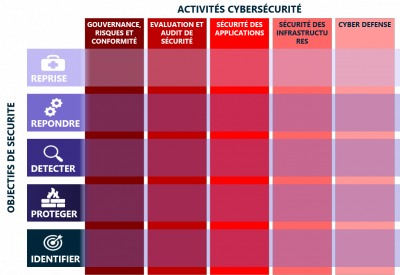

This Use Case Classification Framework is an initiative of the Working Group: it is based on guides and standards in the field, and is intended to be a "practical" way of simply categorizing use cases and encouraging the identification of new ones. In addition to helping to organize knowledge, this framework has been designed to facilitate sharing with international entities. It is built around three axes:

- Categorization of the use case according to the activities of cybersecurity teams, following a typical cybersecurity organization breakdown;

- Categorization of the use case according to the security objectives it addresses, using the NIST Cybersecurity Framework;

- Mapping of the use case to the attack techniques described in MITRE ATT&CK or to the defensive techniques described in MITRE D3FEND.

Cybersecurity activities

The categorization of use cases in relation to the activities of cybersecurity teams has resulted in 5 main categories:

- Risk and compliance management, including all activities relating to the definition, treatment and management of risks and regulations applicable to organizations;

- The security assessment, often identified with the work of Red Teams, performing auditing, penetration testing, and organizational compromise activities;

- Application security, including identity and access management, and application protection (Security by Design, SDLC, DevSecOps, etc.);

- Infrastructure security, including the deployment of infrastructure cybersecurity solutions (firewalls, IPS, EDR, proxies, directories, etc.) required for defense in depth of the information system;

- Cyber defense activities, often identified with the work of Blue Teams, ensuring the operational security of organizations' Information Systems.

Security objectives

Security objectives aim to determine the activities to be implemented in order to control cybersecurity risks within organizations. These are divided into 5 types according to the NIST framework:

- IDENTIFY, with the aim of identifying and evaluating risks and putting in place the appropriate organization to control the information system and manage those risks;

- PROTECT, with the aim of implementing the activities required to protect and maintain the security of information systems;

- DETECT, with the aim of identifying and qualifying cybersecurity incidents;

- RESPOND, with the aim of handling cybersecurity incidents: stopping or containing the attack, and adapting the security policy or its implementation;

- RECOVER, with the aim of returning to a normal operating state following a cybersecurity incident.
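The three axes of the Use Case Classification Framework can be modeled as a small data structure. This makes catalogs filterable, for example listing every use case that serves DETECT. The sketch below rests on several assumptions: the class and field names are hypothetical, and the example mapping of use cases to activities and ATT&CK technique IDs is an illustration, not the Working Group's official classification.

```python
from dataclasses import dataclass, field
from enum import Enum


class Activity(Enum):
    """Axis 1: activities of cybersecurity teams."""
    RISK_AND_COMPLIANCE = "Risk and compliance management"
    SECURITY_ASSESSMENT = "Security assessment (Red Team)"
    APPLICATION_SECURITY = "Application security"
    INFRASTRUCTURE_SECURITY = "Infrastructure security"
    CYBER_DEFENSE = "Cyber defense (Blue Team)"


class NistFunction(Enum):
    """Axis 2: NIST Cybersecurity Framework functions."""
    IDENTIFY = "Identify"
    PROTECT = "Protect"
    DETECT = "Detect"
    RESPOND = "Respond"
    RECOVER = "Recover"


@dataclass
class UseCase:
    name: str
    activity: Activity
    objectives: set[NistFunction]
    # Axis 3: MITRE ATT&CK technique IDs addressed, and/or D3FEND techniques applied.
    attack_techniques: set[str] = field(default_factory=set)
    d3fend_techniques: set[str] = field(default_factory=set)


def by_objective(cases: list[UseCase], objective: NistFunction) -> list[str]:
    """Names of the use cases that serve a given NIST function, sorted."""
    return sorted(c.name for c in cases if objective in c.objectives)


# Illustrative (hypothetical) classification of three use cases.
catalog = [
    UseCase("Machine Learning vs DDoS", Activity.CYBER_DEFENSE,
            {NistFunction.DETECT, NistFunction.RESPOND}, {"T1498"}),
    UseCase("Irregular privilege escalation control", Activity.CYBER_DEFENSE,
            {NistFunction.DETECT}, {"T1548"}),
    UseCase("Confidentiality classification", Activity.RISK_AND_COMPLIANCE,
            {NistFunction.IDENTIFY}),
]
print(by_objective(catalog, NistFunction.DETECT))
# ['Irregular privilege escalation control', 'Machine Learning vs DDoS']
```

Using enums for the first two axes keeps the vocabulary closed, which helps when catalogs are shared between entities. The third axis stays as free-form technique identifiers because ATT&CK and D3FEND evolve independently.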

Case studies presented by members of the Artificial Intelligence and Cybersecurity Working Group

- Use case: data classification

- Use case: Machine Learning for DDoS attack mitigation

- Use case: brand protection and the fight against typo-squatting

- Use case: identifying employees likely to resign

- Use case: User and Endpoint Behavior Analysis in a SOC

- Use case: man-in-the-middle detection in OT and IoT networks

- Use case: User Behavior Anomaly Detection for compromised accounts

- Use case: control of irregular privilege escalations

- Use case: using the pwnagotchi to crack Wi-Fi networks

How to secure applications using artificial intelligence systems

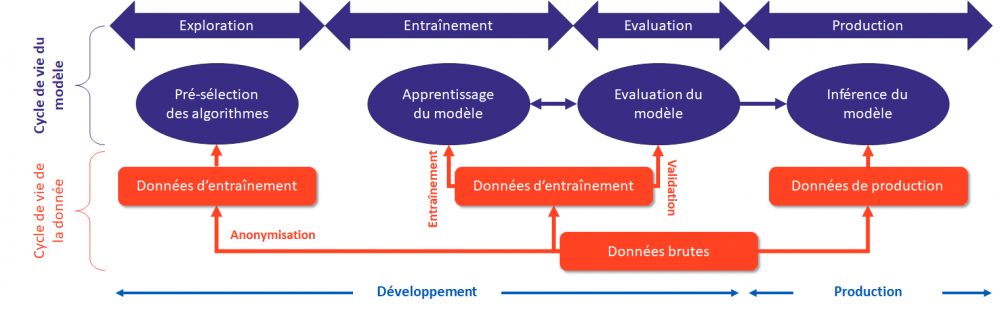

An AI system as seen by cybersecurity experts

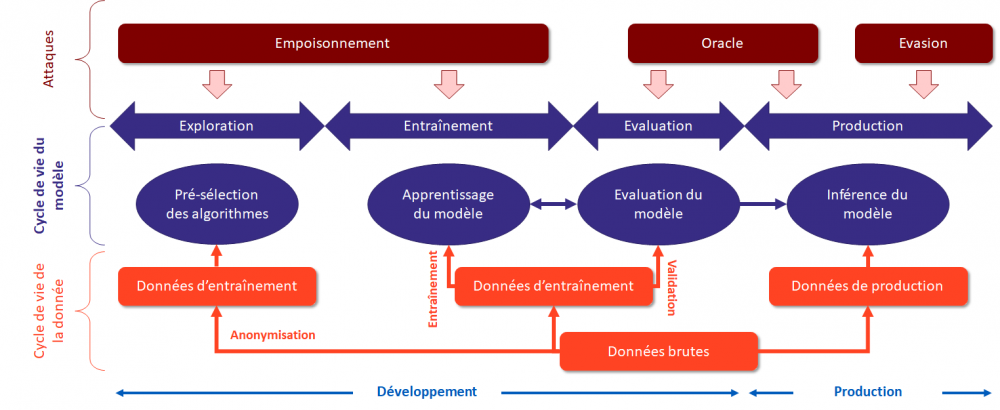

To give readers a succinct, pragmatic view, the Working Group has retained only the building blocks common to every AI project.

An Artificial Intelligence system can be represented as a pipeline made up of 4 key stages:

- Exploration is a set of technical components used to analyze data in order to understand its meaning and determine the axes of analysis for the following phases;

- Training is a set of technical components for modeling data and training an AI model;

- Evaluation is a set of technical components used to validate the relevance of a trained model in relation to the modeling objectives;

- Production is a set of technical components enabling the model to infer results from production data.
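The four stages can be sketched as four functions. The sketch below is in plain Python and assumes a toy labeled dataset with two numeric features. It uses a nearest-centroid classifier, chosen only for brevity. The function names, data and acceptance threshold are hypothetical and do not describe the Working Group's pipeline.

```python
from statistics import mean


def explore(data):
    """Exploration: summarize each feature (min, max) before modeling."""
    n_features = len(data[0][0])
    return [(min(x[i] for x, _ in data), max(x[i] for x, _ in data))
            for i in range(n_features)]


def train(data):
    """Training: fit a nearest-centroid model, one mean vector per label."""
    labels = {y for _, y in data}
    return {y: tuple(mean(col) for col in zip(*(x for x, lab in data if lab == y)))
            for y in labels}


def predict(model, x):
    """Production (inference): return the label of the closest centroid."""
    return min(model, key=lambda y: sum((a - b) ** 2 for a, b in zip(x, model[y])))


def evaluate(model, data):
    """Evaluation: accuracy on held-out data, compared with the modeling objective."""
    return sum(predict(model, x) == y for x, y in data) / len(data)


# Hypothetical samples: ((requests per second, error rate), label).
train_set = [((1.0, 0.1), 0), ((2.0, 0.2), 0), ((9.0, 0.8), 1), ((10.0, 0.9), 1)]
test_set = [((1.5, 0.1), 0), ((9.5, 0.7), 1)]

print(explore(train_set))  # [(1.0, 10.0), (0.1, 0.9)]
model = train(train_set)
if evaluate(model, test_set) >= 0.9:  # hypothetical acceptance threshold
    print(predict(model, (8.0, 0.6)))  # 1
```

Keeping the stages as separate functions mirrors the security view of the pipeline. Each stage has its own inputs and can be isolated, monitored and attacked separately.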

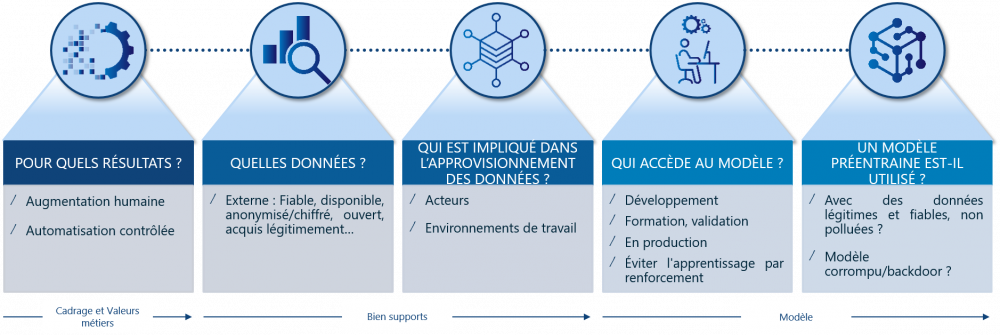

Applying EBIOS RM to an Artificial Intelligence system

Attacks against ML can be grouped into 3 main families (poisoning, oracle and evasion) and cover the entire lifecycle of an ML-based system.
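The poisoning family can be made concrete on a deliberately tiny model. The sketch below assumes a one-feature detector whose threshold is the midpoint between the benign and malicious class means. An attacker who can slip mislabeled samples into the training set moves that threshold, so a malicious value that was caught before training is no longer flagged afterwards. All names and numbers are hypothetical.

```python
from statistics import mean


def fit_threshold(samples):
    """Learn a 1-D decision threshold: midpoint between the two class means."""
    benign = [x for x, y in samples if y == 0]
    malicious = [x for x, y in samples if y == 1]
    return (mean(benign) + mean(malicious)) / 2


def classify(threshold, x):
    """1 = malicious, 0 = benign."""
    return 1 if x > threshold else 0


# Hypothetical feature: outbound connections per minute (label 1 = malicious).
clean = [(2, 0), (3, 0), (4, 0), (20, 1), (22, 1), (24, 1)]
# Poisoning: high-valued samples injected with a "benign" label.
poisoned = clean + [(30, 0), (32, 0), (34, 0)]

t_clean = fit_threshold(clean)
t_poisoned = fit_threshold(poisoned)
print(t_clean, classify(t_clean, 18))        # 12.5 1
print(t_poisoned, classify(t_poisoned, 18))  # 19.75 0
```

Only three injected points are needed here because the model is so simple. The underlying mechanism, corrupting the statistics the model learns from, is what the data-handling measures in this page aim to block.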

The Working Group's research identified five attack patterns applicable to any Artificial Intelligence system:

- Training data poisoning attack (targeted or untargeted)

- Data supply attack

- Attack on library supply chain

- Oracle attack on production model

- Evasion attack on production model
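An evasion attack can be illustrated on a linear detector. For a linear score w·x + b, the gradient with respect to the input is simply w. Shifting each feature by ε against the sign of its weight therefore lowers the score by ε·Σ|w_i|. This is the linear-model case of the fast gradient sign method. The weights, bias and sample below are hypothetical.

```python
def score(weights, bias, x):
    """Linear detector: a positive score means 'malicious'."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def evade(weights, x, epsilon):
    """Move each feature by at most epsilon against the sign of its weight,
    i.e. against the gradient of the score (fast gradient sign method)."""
    return [xi - epsilon * (1 if w > 0 else -1 if w < 0 else 0)
            for w, xi in zip(weights, x)]


# Hypothetical trained detector and a sample it currently flags.
weights, bias = [0.8, -0.5, 1.2], -1.0
sample = [1.0, 0.5, 0.6]
adversarial = evade(weights, sample, epsilon=0.4)

print(score(weights, bias, sample) > 0, score(weights, bias, adversarial) > 0)  # True False
```

No feature moves by more than 0.4, yet the score drops by 0.4 × 2.5 = 1.0, enough to cross the decision boundary. The adversarial datasets recommended below are meant to measure exactly this kind of sensitivity before a model change goes live.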

Practical solutions to reduce the risk of system compromise

The four best practices for limiting the risks associated with AI systems are:

- Set up an ethics and risk committee to select the use cases for artificial intelligence that are relevant to the company and the authorized data.

- Inform artificial intelligence and data teams of their rights and obligations when handling data, and the associated risks.

- Train artificial intelligence and data teams in best practices for secure data handling (anonymization, encryption, etc.), distributed learning techniques and secure development.

- Define, for each project, the data that must not be transmitted to the system, the acceptable limits of system deviation and relevance, and the signals of data theft (query volume, targeted queries on critical data, etc.).
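The query-volume signal named in the last practice can be monitored with a sliding window per client. The sketch below assumes that each model query carries a client identifier and a timestamp in seconds. The class name and the limit of 100 queries per 60 seconds are hypothetical and would be set per project.

```python
from collections import defaultdict, deque


class QueryVolumeMonitor:
    """Flag clients whose number of model queries within a sliding
    time window exceeds a limit (hypothetical helper)."""

    def __init__(self, max_queries: int, window_seconds: float):
        self.max_queries = max_queries
        self.window = window_seconds
        self._history: dict[str, deque] = defaultdict(deque)

    def record(self, client_id: str, timestamp: float) -> bool:
        """Record one query; return True if the client is now above the limit."""
        q = self._history[client_id]
        q.append(timestamp)
        # Drop timestamps that have fallen out of the window.
        while q and q[0] <= timestamp - self.window:
            q.popleft()
        return len(q) > self.max_queries


monitor = QueryVolumeMonitor(max_queries=100, window_seconds=60)
alerts = [monitor.record("analyst-1", t) for t in range(0, 600, 10)]  # 1 query every 10 s
burst = [monitor.record("script-7", t * 0.1) for t in range(150)]     # 150 queries in 15 s
print(any(alerts), burst.index(True))  # False 100
```

A burst well above a client's usual rate is typical of oracle attacks (model extraction) and bulk data theft. Targeted queries on critical data need separate, content-aware rules that this volume check does not cover.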

Measures specific to development, training, validation and production environments:

- Standardize anonymization at data collection, and encryption of data in storage and in transit.

- Implement information leak detection scenarios across the entire data pipeline.

- Provide a specific, isolated training environment, with restricted access to libraries, for those handling the datasets.

- Regularly validate the security level of the open-source libraries and components used in training and validation environments.

- Build adversarial datasets covering the identified risk scenarios to validate model changes.

- Run production models in secure enclaves with limited execution rights.

- Monitor systems for deviations.
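One concrete way to validate third-party libraries and model artifacts, and to counter the library supply-chain attack listed earlier, is to pin their SHA-256 digests. Anything that no longer matches is then refused. The sketch below assumes the artifacts sit in one directory and their expected digests are kept in a trusted mapping. The helper names are hypothetical. For Python dependencies specifically, pip offers a comparable built-in check through its `--require-hashes` mode.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path, chunk_size: int = 1 << 16) -> str:
    """Hash a file in chunks so large model or package files are not loaded at once."""
    digest = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifacts(pinned: dict[str, str], directory: Path) -> list[str]:
    """Return the names of artifacts that are missing or whose digest differs from the pin."""
    failures = []
    for name, expected in pinned.items():
        path = directory / name
        if not path.is_file() or sha256_of(path) != expected:
            failures.append(name)
    return failures


with tempfile.TemporaryDirectory() as tmp:
    d = Path(tmp)
    (d / "model.bin").write_bytes(b"trusted weights")
    pinned = {"model.bin": hashlib.sha256(b"trusted weights").hexdigest()}
    print(verify_artifacts(pinned, d))  # []
    (d / "model.bin").write_bytes(b"tampered weights")
    print(verify_artifacts(pinned, d))  # ['model.bin']
```

The pinned digests must be stored and updated through a channel the attacker cannot reach. Otherwise a compromised artifact can simply be re-pinned.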

Commons

| Page | Description |
|---|---|
| Applying EBIOS RM to an AI system | Application of the "EBIOS RM methodology" guide published by ANSSI to assess a system based on artificial intelligence. |
| AI facilitator | An AI facilitator is a cyber correspondent with practical knowledge of both cybersecurity and Artificial Intelligence. |
| Artificial intelligence in cybersecurity | Identifies and analyzes selected use cases in which Artificial Intelligence enables advances in cybersecurity. |
| AI security referent | The cybersecurity referent for data scientists is embedded in their working environment and is their preferred point of contact for ensuring that cybersecurity is concretely taken into account in the AI projects they develop. |
| AI regulation | Summary of the European regulation known as the "Artificial Intelligence Act". |
| UC3: Brand protection, fight against typo-squatting | Development of the educational use case "UC 3: Fight against typosquatting" within the AI and Cyber Working Group (GT IA et Cyber). |
| UC5: Machine Learning vs DDoS | Development of the educational use case "Machine Learning vs DDoS attack" within the AI and Cyber Working Group (GT IA et Cyber). |
| UC7: Suspicious security events detection | Development of the educational use case "Suspicious security events detection" within the AI and Cyber Working Group (GT IA et Cyber). |

Use cases

| Status | ||

|---|---|---|

| Confidentiality Classification | ||

| Fight against typo-squatting |